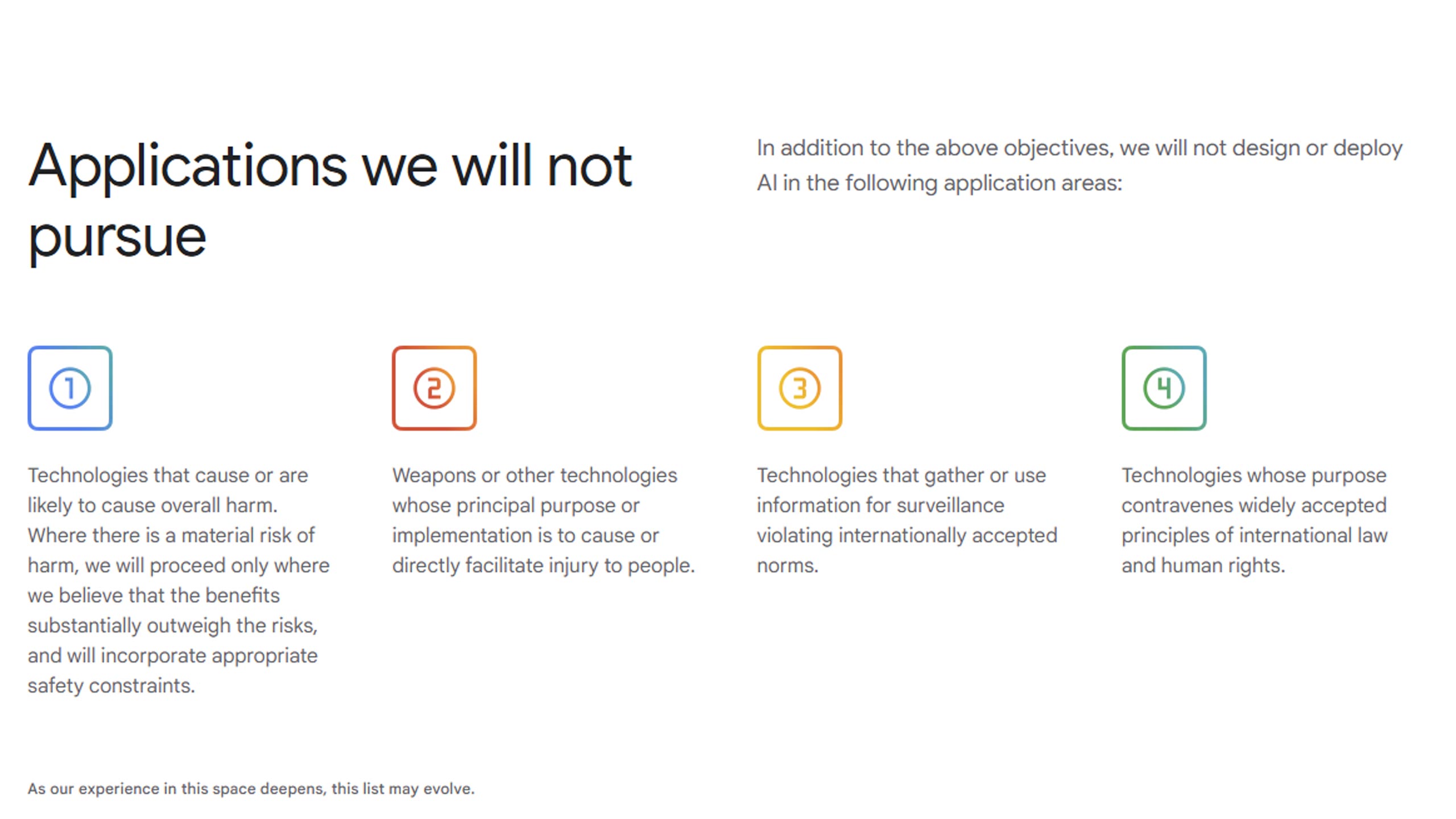

Google has made one of the most substantive changes to its AI principles since first publishing them in 2018. In a change spotted by The Washington Post, the search giant edited the document to remove pledges that it would not "design or deploy" AI tools for use in weapons or surveillance technology. Previously, those guidelines included a section titled "Applications we will not pursue," which is not present in the current version of the document.

Instead, there is now a section titled "Responsible development and deployment." There, Google says it will implement "appropriate human oversight, due diligence, and feedback mechanisms to align with user goals, social responsibility, and widely accepted principles of international law and human rights."

That's a far broader commitment than the specific ones the company made as recently as the end of last month, when the prior version of its AI principles was still live on its website. For instance, as it relates to weapons, the company previously said it would not design AI for use in "weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people." As for AI surveillance tools, the company said it would not develop tech that violates "internationally accepted norms."

When asked for comment, a Google spokesperson pointed Engadget to a blog post the company published on Thursday. In it, DeepMind CEO Demis Hassabis and James Manyika, senior vice president of research, labs, technology and society at Google, say AI's emergence as a "general-purpose technology" necessitated a policy change.

"We believe democracies should lead in AI development, guided by core values like freedom, equality, and respect for human rights. And we believe that companies, governments, and organizations sharing these values should work together to create AI that protects people, promotes global growth, and supports national security," the two wrote. "… Guided by our AI Principles, we will continue to focus on AI research and applications that align with our mission, our scientific focus, and our areas of expertise, and stay consistent with widely accepted principles of international law and human rights – always evaluating specific work by carefully assessing whether the benefits substantially outweigh potential risks."

When Google first published its AI principles in 2018, it did so in the aftermath of Project Maven. That was a controversial government contract that, had Google decided to renew it, would have seen the company provide AI software to the Department of Defense for analyzing drone footage. Dozens of Google employees quit the company in protest of the contract, with thousands more signing a petition in opposition. When Google published its new guidelines, CEO Sundar Pichai said he hoped they would stand "the test of time."

By 2021, however, Google had begun pursuing military contracts again, with what was reportedly an "aggressive" bid for the Pentagon's Joint Warfighting Cloud Capability cloud contract. At the start of this year, The Washington Post reported that Google employees had repeatedly worked with Israel's Defense Ministry to expand the government's use of AI tools.

This article originally appeared on Engadget at https://www.engadget.com/ai/google-now-thinks-its-ok-to-use-ai-for-weapons-and-surveillance-224824373.html?src=rss