The author of California's SB 1047, the nation's most controversial AI safety bill of 2024, is back with a new AI bill that could shake up Silicon Valley.

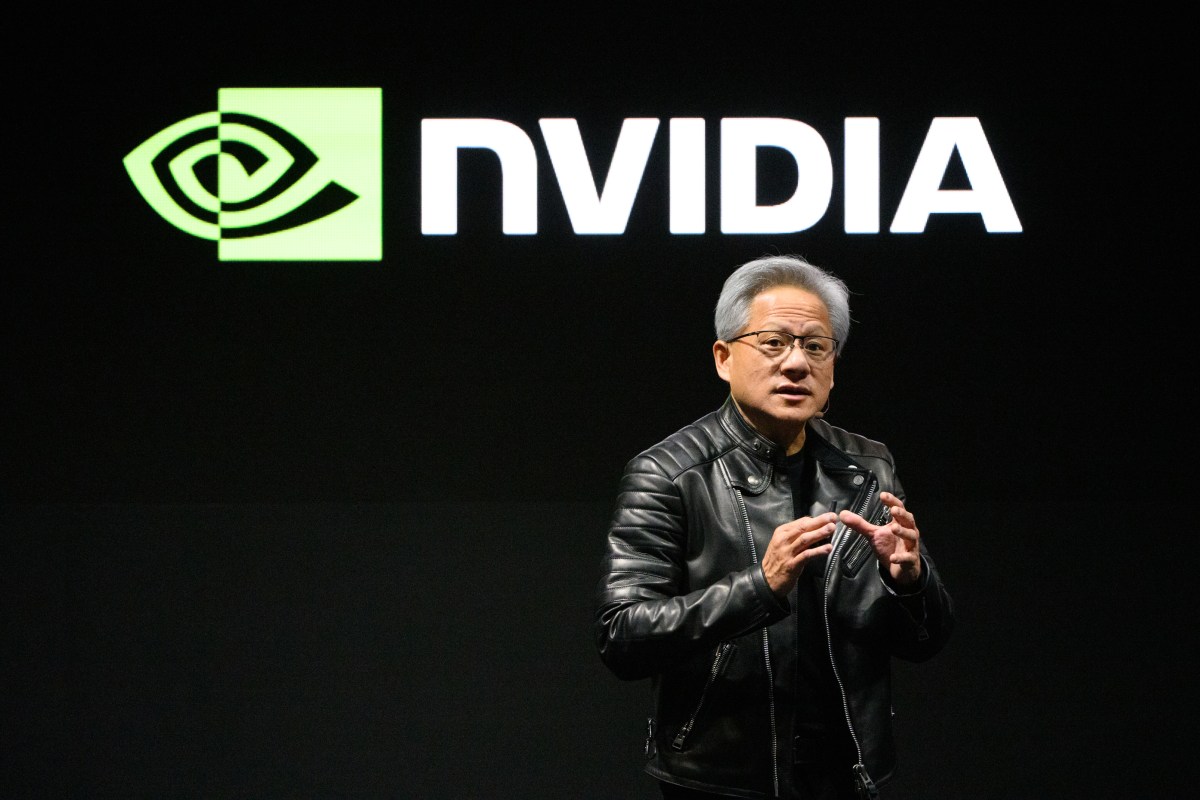

California state Senator Scott Wiener introduced a new bill on Friday that would protect employees at leading AI labs, allowing them to speak out if they think their company's AI systems could pose a “critical risk” to society. The new bill, SB 53, would also create a public cloud computing cluster, called CalCompute, to give researchers and startups the computing resources they need to develop AI that benefits the public.

Wiener's last AI bill, California's SB 1047, sparked a lively nationwide debate over how to handle massive AI systems that could cause disasters. SB 1047 aimed to prevent the possibility of very large AI models causing catastrophic events, such as loss of life or cyberattacks costing more than $500 million in damages. However, Governor Gavin Newsom ultimately vetoed the bill in September, saying SB 1047 was not the best approach.

But the debate over SB 1047 quickly turned ugly. Some Silicon Valley leaders said SB 1047 would hurt America's competitive edge in the global AI race, and claimed the bill was inspired by unrealistic fears that AI systems could bring about science fiction-like scenarios. Meanwhile, Senator Wiener alleged that some venture capitalists engaged in a “propaganda campaign” against his bill, pointing in part to Y Combinator's claim that SB 1047 would send startup founders to jail, a claim experts argued was misleading.

SB 53 essentially takes the least controversial parts of SB 1047, such as whistleblower protections and the establishment of a CalCompute cluster, and repackages them into a new AI bill.

Notably, Wiener is not shying away from existential AI risk in SB 53. The new bill specifically protects whistleblowers who believe their employers are creating AI systems that pose a “critical risk.” The bill defines critical risk as a foreseeable or material risk that a developer's development, storage, or deployment of a foundation model, as defined, “will result in the death of, or serious injury to, more than 100 people, or more than $1 billion in damage to rights in money or property.”

SB 53 bars frontier AI model developers, likely including OpenAI, Anthropic, and xAI, among others, from retaliating against employees who disclose concerning information to California's Attorney General, federal authorities, or other employees. Under the bill, these developers would be required to report back to whistleblowers on certain internal processes that the whistleblowers find concerning.

As for CalCompute, SB 53 would establish a group to build out a public cloud computing cluster. The group would be made up of University of California representatives, as well as other public and private researchers. It would make recommendations on how to build CalCompute, how large the cluster should be, and which users and organizations should have access to it.

Of course, it is early in the legislative process for SB 53. The bill needs to be reviewed and passed by California's legislative bodies before it reaches Governor Newsom's desk. State lawmakers will surely be waiting for Silicon Valley's reaction to SB 53.

However, 2025 could be a tougher year to pass AI safety bills than 2024. California passed 18 AI-related bills in 2024, but now it seems as if the AI doom movement has lost ground.

Vice President JD Vance signaled at the Paris AI Action Summit that America is not interested in AI safety, but rather prioritizes AI innovation. While the CalCompute cluster established by SB 53 could surely be seen as advancing AI development, it is unclear how legislative efforts around existential AI risk will fare in 2025.